Eager Learner wrote: Hi, I've heard from a certain well known racing damper manufacturer that has had good results for many years in high level GT racing, that absolutely minimizing hysteresis is not always a good thing and that one of the main reasons people like to reduce hysteresis is that it makes damper simulation much easier if hysteresis is assumed to be 0.

Is there any truth to this statement at all? Or is it just an excuse?

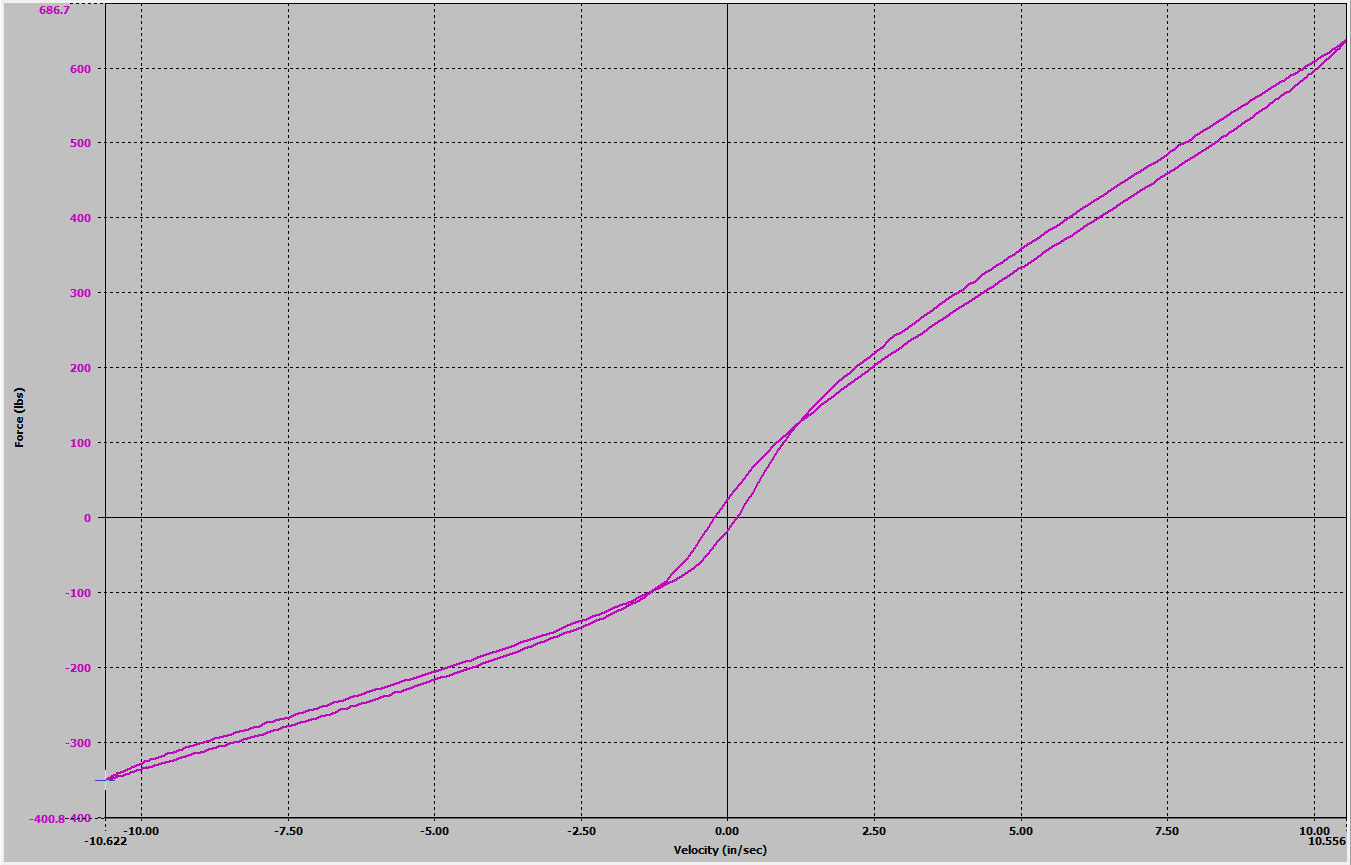

Is a damper without hysteresis easier to model or simulate? Sure. That's common sense - less complexity makes things easier, right? Is a damper with hysteresis "better" than one without? I don't think there's a clear cut answer to that.
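To put something concrete behind the "easier to model" part, here's a rough sketch. It is not any manufacturer's model. I'm assuming the hysteresis comes from compliance in series with the damping element (oil compressibility, gas, mounts), which is only one common source, and I'm representing it as a linear dashpot `c` in series with a spring `k` (a Maxwell element). All the numbers are made up for illustration. The zero-hysteresis damper is a one-liner, `F = c*v`. The compliant one needs a state variable and a time integration, and its force-velocity trace opens up into a loop.

```python
import math

def simulate_damper(c, k=None, amplitude=0.025, freq_hz=3.0, steps=20000, cycles=5):
    """Drive a damper with a sine displacement; return (velocity, force)
    samples from the last cycle.

    c : damping coefficient [N*s/m]
    k : series stiffness [N/m]; None means rigid, i.e. the ideal F = c*v
    All default values are illustrative assumptions, not real damper data.
    """
    omega = 2.0 * math.pi * freq_hz
    dt = cycles / freq_hz / steps
    last_cycle_start = steps - steps // cycles
    force = 0.0
    samples = []
    for i in range(steps):
        v = amplitude * omega * math.cos(omega * i * dt)
        if k is None:
            # No hysteresis: force depends only on the current velocity.
            force = c * v
        else:
            # Series spring + dashpot: dF/dt = k * (v - F/c).
            # Backward Euler step, solved for the new force.
            force = (force + k * dt * v) / (1.0 + k * dt / c)
        if i >= last_cycle_start:
            samples.append((v, force))
    return samples

def loop_area(samples):
    """Area enclosed by the force-velocity trace (shoelace formula).
    Zero means the trace is a single line, i.e. no hysteresis."""
    total = 0.0
    n = len(samples)
    for i in range(n):
        v0, f0 = samples[i]
        v1, f1 = samples[(i + 1) % n]
        total += v0 * f1 - v1 * f0
    return abs(total) / 2.0

ideal = simulate_damper(c=2000.0)
compliant = simulate_damper(c=2000.0, k=200000.0)
print("ideal loop area:    ", loop_area(ideal))
print("compliant loop area:", loop_area(compliant))
```

The ideal damper's loop area comes out at essentially zero, while the compliant one encloses a clearly nonzero area. Note that this only shows the modeling cost; it says nothing about which one is faster on track.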

As a preface to this next bit, let's just put out there right now - no racecar is ever "optimized." Never the best it can ever be. That's why you have practice sessions every weekend, and why cars get better over the course of a season. You'll never have a 100% complete understanding of everything there is.

Let's say you're a race team, and you've got two options for dampers. One is a pretty simple design - maybe it's linear with little or no hysteresis. The other is more complex, non-linear, with hysteresis. And let's say for argument's sake the more complex one has the most ideal-world potential to be best.

What's going to be the best option for you - the damper with 100% of the potential performance that you only understand 70% of... or the damper with 90% of the potential that you understand 95% of?

Now maybe your main competitor has a few super shock gurus on their team and they can understand 95% of any option out there. Best choice for them might be different than the best choice for you!
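If you want to see the numbers side by side, here's a deliberately crude sketch. The big assumption (mine, purely for illustration) is that what you actually get on track is roughly potential multiplied by how much of it you understand. Real life is nowhere near that tidy, and `realized_performance` is a made-up helper, not an established metric.

```python
def realized_performance(potential, understanding):
    """Toy score: fraction of ideal performance you actually extract.
    Assumes (hypothetically) that the two factors simply multiply."""
    return potential * understanding

# Your team: understands 70% of the complex damper, 95% of the simple one.
yours_complex = realized_performance(1.00, 0.70)
yours_simple = realized_performance(0.90, 0.95)

# Competitor with shock gurus: understands 95% of either option.
rival_complex = realized_performance(1.00, 0.95)
rival_simple = realized_performance(0.90, 0.95)

print(f"you:   complex {yours_complex:.3f} vs simple {yours_simple:.3f}")
print(f"rival: complex {rival_complex:.3f} vs simple {rival_simple:.3f}")
```

Under that assumption the simple damper wins for you and the complex one wins for the competitor, which is the whole point: same hardware choices, different best answer.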

It's like that for a lot of things. Dampers, springs, tires, aerodynamics, kinematics and compliance, etc etc. Many different options on how to approach each of them, and there's no globally best choice. That's what makes engineering so interesting.

Grip is a four letter word. All opinions are my own and not those of current or previous employers.